A BETTER METHOD TO RECALCULATE NORMALS IN UNITY

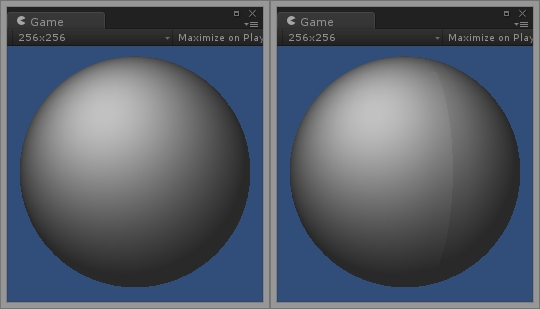

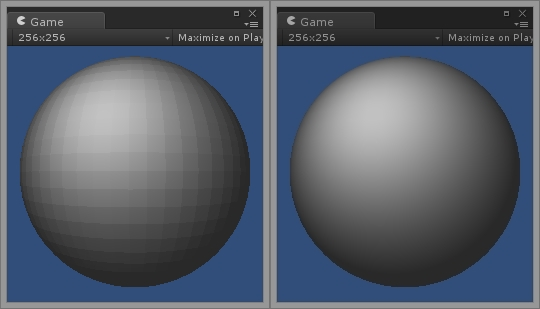

A visible seam appears after recalculating normals at runtime.

You might have noticed that, for some meshes, the result of calling Unity’s built-in RecalculateNormals() method looks different (i.e. worse) than when the normals are calculated from the import settings. A similar problem appears when recalculating normals after combining meshes, with obvious seams between them. In this post I’m going to show you how RecalculateNormals() works in Unity, and how and why it differs from the normal calculation performed when importing a model. Moreover, I will offer you a fast solution that fixes this problem.

This article is also useful to those who want to generate 3D meshes dynamically during gameplay, not just to those who have encountered this problem.

Some background…

I’m going to explain some basic concepts as briefly as I can, just to provide some context. Feel free to skip this section if you wish.

Directional Vectors

Directional vectors are not to be confused with point vectors, even though we use exactly the same representation in code. As a point vector, Vector3(0, 1, 1) simply describes a single point whose coordinates along the X, Y and Z axes are 0, 1 and 1 respectively. As a directional vector, it describes the direction we face when we stand at the origin, Vector3(0, 0, 0), and look towards the point Vector3(0, 1, 1).

If we draw a line from Vector3(0, 0, 0) to Vector3(0, 1, 1), we will notice that this line has a length of 1.414214 units. This is called the magnitude or length of a vector and it is equal to sqrt(X^2 + Y^2 + Z^2). A normalized vector is one that has a magnitude of 1. The normalized version of Vector3(0, 1, 1) is approximately Vector3(0, 0.7071, 0.7071), and we get it by dividing the vector by its magnitude. When using directional vectors, it is important to keep them normalized (unless you really know what you’re doing), because a lot of mathematical calculations depend on that.
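To make the arithmetic concrete, here is a minimal sketch of magnitude and normalization. It uses System.Numerics.Vector3 so that it runs outside Unity; the class and method names are hypothetical. Inside Unity you would normally just read Vector3.magnitude and Vector3.normalized instead.

```csharp
using System;
using System.Numerics;

// Illustrative helper, not part of Unity or of the article's code.
public static class DirectionExample
{
    // Length of a vector: sqrt(X^2 + Y^2 + Z^2).
    public static float Magnitude(Vector3 v)
    {
        return (float)Math.Sqrt(v.X * v.X + v.Y * v.Y + v.Z * v.Z);
    }

    // Divide the vector by its magnitude so that its length becomes 1.
    // A zero vector has no direction, so it is returned unchanged.
    public static Vector3 Normalized(Vector3 v)
    {
        float magnitude = Magnitude(v);
        return magnitude > 0f ? v / magnitude : Vector3.Zero;
    }
}
```

For Vector3(0, 1, 1) this gives a magnitude of about 1.414214 and a normalized vector of about (0, 0.7071, 0.7071).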

Normals

Normals are mostly used to calculate the shading of a surface. A normal is a directional vector that defines which way a surface is facing, i.e. it points away from a face (a surface made up of three or more vertices, i.e. points). More accurately, normals are stored on the vertices themselves rather than on the face. This means that a flat surface of three points actually has three identical normals.

A normal is not to be confused with a normalized vector, although normals are normalized – otherwise, light calculations would look wrong.

How Smoothing works

Smoothing works by averaging the normals of adjacent faces. What this means is that the normal of each vertex is not the same as that of its face, but rather the average value of the normals of all the faces it belongs to. This also means that, in smooth shading, vertices of the same face do not necessarily have identical normals.
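As a small sketch of the two ideas above: the normal of a triangle can be computed as the normalized cross product of two of its edges, and a smooth vertex normal is the normalized sum of the normals of the faces around it. The names below are hypothetical, and System.Numerics.Vector3 stands in for Unity's Vector3. Which side the face normal points to depends on the winding order of the triangle and the handedness of the coordinate system.

```csharp
using System;
using System.Numerics;

// Illustrative helper, not part of Unity or of the article's code.
public static class SmoothingExample
{
    // Normal of the triangle (a, b, c): normalized cross product of two edges.
    public static Vector3 FaceNormal(Vector3 a, Vector3 b, Vector3 c)
    {
        return Vector3.Normalize(Vector3.Cross(b - a, c - a));
    }

    // Smooth normal of a vertex: sum the normals of the faces it belongs to,
    // then normalize. The direction equals that of the average normal.
    public static Vector3 SmoothNormal(params Vector3[] faceNormals)
    {
        Vector3 sum = Vector3.Zero;
        foreach (Vector3 normal in faceNormals)
        {
            sum += normal;
        }
        return Vector3.Normalize(sum);
    }
}
```

For example, a vertex shared by one face looking along +Z and another looking along +X ends up with a normal of about (0.7071, 0, 0.7071), halfway between the two.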

A sphere with flat and smooth shading.

How Unity recalculates normals at runtime

When you call the RecalculateNormals() method on a mesh in Unity, what happens is very straightforward.

Unity stores the mesh information for a list of vertices in a few different arrays. There’s one array for vertex positions, one array for normals, another for UVs, etc. All of these arrays have the same size, and the entries at a given index across these arrays together describe one single vertex. For example, mesh.vertices[N] and mesh.normals[N] are the position and the normal, respectively, of the Nth vertex in our list of vertices.

However, there is a special array called triangles that describes the actual faces of the mesh. It is a sequence of integer values, and each integer is the index of a vertex. Every three consecutive integers form a single triangle face, which means that the size of this array is always a multiple of 3.
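A tiny example of this layout, written as plain arrays rather than a real Unity Mesh: a quad made of four vertices and two triangles. The class and its members are hypothetical, and the winding order is chosen only for illustration.

```csharp
using System;
using System.Numerics;

// Illustrative data only; in Unity these arrays would be mesh.vertices and mesh.triangles.
public static class QuadMesh
{
    // Four vertex positions of a unit quad in the XY plane.
    public static readonly Vector3[] Vertices =
    {
        new Vector3(0f, 0f, 0f), // index 0
        new Vector3(1f, 0f, 0f), // index 1
        new Vector3(0f, 1f, 0f), // index 2
        new Vector3(1f, 1f, 0f), // index 3
    };

    // Two triangles, three vertex indices each. Indices 1 and 2 are used by both.
    public static readonly int[] Triangles = { 0, 2, 1, 2, 3, 1 };

    public static int TriangleCount
    {
        get { return Triangles.Length / 3; }
    }

    // Looks up the three corner positions of the given triangle.
    public static (Vector3 a, Vector3 b, Vector3 c) Corners(int triangle)
    {
        int i = triangle * 3;
        return (Vertices[Triangles[i]], Vertices[Triangles[i + 1]], Vertices[Triangles[i + 2]]);
    }
}
```

Because indices 1 and 2 appear in both triangles, those two vertices are shared, which is exactly what makes smoothing across the shared edge possible.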

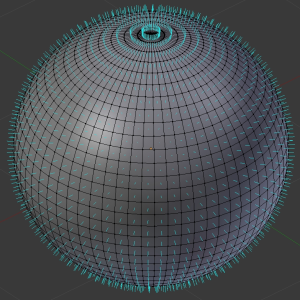

As a side note: Unity assumes that all faces are triangles, so even if you import a model with faces having more than three points (as is the case with the sphere above), they’re automatically converted, upon importing, to multiple smaller faces of three vertices each.

The source code of RecalculateNormals() is not available, but, guessing from its output, this pseudo-code (sloppily mixed with some real code) follows exactly the same algorithm and produces the same result:

    initialize all normals to (0, 0, 0)

Notice how I don’t explicitly average the normals; that is because CalculateSurfaceNormal(…) is assumed to return a normalized vector. Normalizing a sum of vectors gives the same direction as normalizing their average, because dividing by the number of vectors only changes the length.
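The algorithm described above (start from zero normals, add each normalized face normal to the three vertices of its triangle, then normalize) can be sketched as follows. This is a reconstruction from the description, not Unity's actual implementation, and the names are hypothetical. It operates on plain arrays and System.Numerics.Vector3 so it can run outside Unity.

```csharp
using System;
using System.Numerics;

// Illustrative reconstruction; Unity's real RecalculateNormals() source is not available.
public static class NaiveNormals
{
    public static Vector3[] Recalculate(Vector3[] vertices, int[] triangles)
    {
        // A new array starts with every normal at (0, 0, 0).
        var normals = new Vector3[vertices.Length];

        for (int i = 0; i < triangles.Length; i += 3)
        {
            int a = triangles[i], b = triangles[i + 1], c = triangles[i + 2];

            // Normalized face normal from two edges of the triangle.
            Vector3 faceNormal = Vector3.Normalize(
                Vector3.Cross(vertices[b] - vertices[a], vertices[c] - vertices[a]));

            // Accumulate on every vertex INDEX used by this triangle.
            normals[a] += faceNormal;
            normals[b] += faceNormal;
            normals[c] += faceNormal;
        }

        for (int i = 0; i < normals.Length; i++)
        {
            // Vertices not referenced by any triangle keep a zero normal.
            if (normals[i] != Vector3.Zero)
            {
                normals[i] = Vector3.Normalize(normals[i]);
            }
        }
        return normals;
    }
}
```

Note that accumulation is keyed on the vertex index, not on the position. Two vertices at the same position but with different indices never contribute to each other's normal.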

How Unity calculates normals while importing

The exact algorithm Unity uses in this case is more complicated than RecalculateNormals(). I can think of three reasons for that:

- This algorithm is much slower to use, so Unity avoids calling that during runtime.

- Unity has more information while importing.

- Unity combines vertices after importing.

The first reason is not the real reason because Unity could have still provided an alternative method to calculate normals in runtime that still performs fast enough. The second and third reasons are actually very similar, since they both come down to one thing: After Unity imports a mesh, it becomes a new entity independent from its source model.

During mesh import, Unity may consider vertices shared among faces to exist multiple times, once for each face. This means that when importing a cube, which has 8 vertices, Unity actually sees 36 vertices (3 vertices for each of the 2 triangles on each of the 6 sides). We will refer to this as the expanded vertex list. However, it can also see the condensed 8-vertex list at the same time. If it can’t, then I’m guessing it first silently builds that structure by finding which vertices are at the same position as other vertices.

As you saw in the previous section, smoothing in RecalculateNormals() only works when vertices at the same position are assumed to be one and the same. With this new data at hand, a different algorithm is to be used. In the following pseudo-code, vertex represents a vertex from the condensed list of vertices and vertexPoint represents a vertex from the expanded list. We also assume that any vertexPoint has direct access to the vertex it is associated with.
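To illustrate the two lists, the sketch below builds the 36-entry expanded list of a unit cube and then condenses it by position. How Unity does this internally is not known; this is only one plausible way, with hypothetical names, using exact position equality for simplicity.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Illustrative only; not Unity's importer.
public static class VertexLists
{
    // Expanded list for a unit cube: 6 sides x 2 triangles x 3 corners = 36 entries.
    // Winding order is ignored here because only positions matter for this example.
    public static Vector3[] ExpandedCube()
    {
        var result = new List<Vector3>();
        for (int axis = 0; axis < 3; axis++)
        {
            for (int side = 0; side <= 1; side++)
            {
                var q = new Vector3[4];
                for (int k = 0; k < 4; k++)
                {
                    var c = new float[3];
                    c[axis] = side;               // fixed coordinate of this side
                    c[(axis + 1) % 3] = k & 1;    // the other two coordinates vary
                    c[(axis + 2) % 3] = k >> 1;
                    q[k] = new Vector3(c[0], c[1], c[2]);
                }
                result.AddRange(new[] { q[0], q[1], q[2], q[2], q[1], q[3] });
            }
        }
        return result.ToArray();
    }

    // Condensed list: one entry per distinct position.
    // condensedIndex[i] tells which condensed vertex the i-th expanded entry maps to.
    public static List<Vector3> Condense(Vector3[] expanded, out int[] condensedIndex)
    {
        var lookup = new Dictionary<Vector3, int>();
        var condensed = new List<Vector3>();
        condensedIndex = new int[expanded.Length];
        for (int i = 0; i < expanded.Length; i++)
        {
            int index;
            if (!lookup.TryGetValue(expanded[i], out index))
            {
                index = condensed.Count;
                condensed.Add(expanded[i]);
                lookup[expanded[i]] = index;
            }
            condensedIndex[i] = index;
        }
        return condensed;
    }
}
```

Calling Condense on the output of ExpandedCube yields 8 positions from 36 entries, which matches the numbers in the text.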

    threshold = ?

As it turns out, the final result contains neither the expanded vertex list nor the condensed vertex list. It is an optimized version which takes all the identical vertices and merges them together. And by identical, I don’t mean just the position and normals; it also takes into account all the UV coordinates, tangents, bone weights, colors, etc.; in short, any information that is stored for a single vertex.

The problem explained

As you may have guessed from the previous section, the problem is that vertices that differ in one or more aspects are considered as completely different vertices in the final mesh that is used during runtime, even if they share the same position. You will most likely encounter this problem when your model has UV coordinates. In fact, the first image of this article was produced precisely by a model with a UV seam, after RecalculateNormals() was called.

I will not get into many details about UV coordinates, but let me just say that they’re used for texturing, which means it is actually a very common problem; if you ever recalculate normals at runtime then this situation is bound to appear sooner or later.

You can also see this problem if you’re merging two meshes together (say, two opposing halves of a sphere), even if they have identical information. That’s because when merging two meshes, what actually happens is that we simply append the data of one mesh to the other, which leaves the vertices common to both as distinct entries in the final mesh.
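The append step can be sketched like this. It is a simplified stand-in for what a mesh merge does (in Unity you would typically use Mesh.CombineMeshes), with hypothetical names and only positions and triangle indices taken into account.

```csharp
using System;
using System.Numerics;

// Illustrative only; a simplified model of merging two meshes.
public static class MeshAppend
{
    public static (Vector3[] vertices, int[] triangles) Append(
        Vector3[] verticesA, int[] trianglesA,
        Vector3[] verticesB, int[] trianglesB)
    {
        // Vertex data of B is copied after the vertex data of A, duplicates included.
        var vertices = new Vector3[verticesA.Length + verticesB.Length];
        verticesA.CopyTo(vertices, 0);
        verticesB.CopyTo(vertices, verticesA.Length);

        // Triangle indices of B are shifted so they still point at B's vertices.
        var triangles = new int[trianglesA.Length + trianglesB.Length];
        trianglesA.CopyTo(triangles, 0);
        for (int i = 0; i < trianglesB.Length; i++)
        {
            triangles[trianglesA.Length + i] = trianglesB[i] + verticesA.Length;
        }
        return (vertices, triangles);
    }
}
```

If both inputs contain a vertex at the same position, the result contains that position twice under two different indices. No triangle from A ever references B's copy, so an index-based normal calculation cannot smooth across the border.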

The technical cause behind the problem is, as we have pointed out, that RecalculateNormals() does not know which distinct vertices in our list have the same position as others. Unity knows this while importing, but this information is now lost.

This is also the reason RecalculateNormals() does not take any angle threshold as a parameter, contrary to calculating normals during import time. If I import a model with a 0° tolerance, then it will be impossible to have any smooth shading during run-time.

My solution

My solution to this problem is not very simple, but I have provided the full source code. It is somewhat of a hybrid solution between the two extremes used by Unity. I use hashing to cluster vertices that exist in the same position to achieve a nearly linear complexity (based on the number of vertices in the expanded list) to avoid a brute-force approach. Therefore, it is fast and asymptotically optimal. However, it does allocate a bunch of temporary memory which might cause a bit of a lag.
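To give a rough idea of the approach, here is a heavily simplified sketch: group triangle corners by position using a dictionary, then, for each corner, sum the face normals of the corners in the same group whose faces are within the angle threshold. This is not the published code. The names are hypothetical, positions are compared exactly instead of with a tolerance, and degenerate triangles are not handled.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Simplified illustration of position-grouped smoothing; not the article's actual source.
public static class ThresholdSmoothing
{
    public static Vector3[] Recalculate(Vector3[] vertices, int[] triangles, float angleDegrees)
    {
        float cosThreshold = (float)Math.Cos(angleDegrees * Math.PI / 180.0);
        int triangleCount = triangles.Length / 3;
        var faceNormals = new Vector3[triangleCount];

        // Position -> every (triangle, vertex index) corner located at that position.
        var groups = new Dictionary<Vector3, List<(int tri, int vert)>>();

        for (int t = 0; t < triangleCount; t++)
        {
            int a = triangles[t * 3], b = triangles[t * 3 + 1], c = triangles[t * 3 + 2];
            faceNormals[t] = Vector3.Normalize(
                Vector3.Cross(vertices[b] - vertices[a], vertices[c] - vertices[a]));

            foreach (int v in new[] { a, b, c })
            {
                List<(int tri, int vert)> list;
                if (!groups.TryGetValue(vertices[v], out list))
                {
                    list = new List<(int tri, int vert)>();
                    groups[vertices[v]] = list;
                }
                list.Add((t, v));
            }
        }

        var normals = new Vector3[vertices.Length];
        foreach (var list in groups.Values)
        {
            foreach (var target in list)
            {
                Vector3 sum = Vector3.Zero;
                foreach (var other in list)
                {
                    // Corners that share a vertex index always blend; others only
                    // if the angle between their faces is within the threshold.
                    bool sameVertex = other.vert == target.vert;
                    bool withinAngle =
                        Vector3.Dot(faceNormals[target.tri], faceNormals[other.tri]) >= cosThreshold;
                    if (sameVertex || withinAngle)
                    {
                        sum += faceNormals[other.tri];
                    }
                }
                normals[target.vert] = Vector3.Normalize(sum);
            }
        }
        return normals;
    }
}
```

With two triangles that meet at 90° along an edge whose vertices are duplicated (as along a UV seam), a threshold of 100° blends the normals on both copies of the edge vertices, while a threshold of 60° keeps them flat.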

Usage

By adding my code to your project (found at the end of this article), you will be able to recalculate normals based on an angle threshold:

    var mesh = GetComponentInChildren<MeshFilter>().mesh;

As you probably know, comparing two floating-point numbers for equality is bad practice, because of the inherent imprecision of this data type. The usual approach is to check whether the floats have a very small difference between them (say, 0.0001), but this is not enough in this case since I need to produce a hash key as well. I use a different approach that I loosely call “digit tolerance”, which compares floats by converting them to long integers after multiplying them by a power of 10. This method makes it very easy for Vector3 values to have identical hash codes if they’re identical within a given tolerance.

My implementation multiplies by the constant 100,000 (for 5 decimal digits), which means that 4.000002 is equal to 4.00000. The tolerance is rounded, so 4.000008 is equal to 4.00001. If we use a number that is either too small or too large then we will probably get wrong results. 100,000 is a good number and you will rarely need to change it, but feel free to do so if you think another value works better for you.
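A minimal sketch of the “digit tolerance” idea, assuming the 100,000 multiplier mentioned above. The class and method names are hypothetical and this is not the published VertexKey code; it only shows how rounding to a long makes nearby values compare and hash as equal.

```csharp
using System;
using System.Numerics;

// Illustrative only; not the article's VertexKey implementation.
public static class DigitTolerance
{
    // 100,000 keeps 5 decimal digits.
    public const double Scale = 100000.0;

    // Scale, then round to the nearest whole number.
    public static long Quantize(float value)
    {
        return (long)Math.Round(value * Scale);
    }

    // A value tuple has structural equality and a matching hash code,
    // so it can be used directly as a dictionary key.
    public static (long x, long y, long z) Key(Vector3 position)
    {
        return (Quantize(position.X), Quantize(position.Y), Quantize(position.Z));
    }
}
```

With this, 4.000002 and 4.00000 both quantize to 400000, while 4.000008 and 4.00001 both quantize to 400001. One caveat of any rounding scheme like this: two values that sit on opposite sides of a rounding boundary land in different buckets even if they are extremely close to each other.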

Things to be aware of

One thing to keep in mind is that my solution might smoothen normals even with an angle higher than the threshold; this only happens when we try to smoothen with an angle less than the one specified by the import settings. Don’t worry though, it is still possible to get the right result.

I could have written an alternative method that splits those vertices at runtime, or one that recreates a flat mesh and then merges identical vertices after smoothing. However, I thought that this was much bigger trouble than what it was worth and it would kill performance.

To achieve a better result, you can import a mesh with a 0° tolerance. This will allow you to smooth normals at any angle during runtime. However, since a 0° tolerance produces a larger model, you can also just import a mesh with the minimum tolerance you’re ever going to need. If you import at 30°, you can still smooth correctly at runtime for any degree that is higher than 30°.

In any case, a 60° tolerance is good for most applications. You can use that for both import and runtime normal calculation.

Edit 25/03/2017:

The code has been updated, so the old code has been omitted from this post. You can find the new code here!

Happy smoothing!

A BETTER METHOD TO RECALCULATE NORMALS IN UNITY – PART 2

It’s been over 2 years since I posted about a method to recalculate normals in Unity that fixes some of the issues of Unity’s default RecalculateNormals() method. I’ve used this algorithm (and similar variations) myself in non-Unity projects and I’ve since made minor adjustments, but I never bothered to update the Unity version of the code. Someone recently reported to me that it fails when working with meshes with multiple materials, so I decided to go ahead and update it.

The most important performance update is that I now use FNV hashing to encode the vertex positions. The first implementation used a very naive hash function that produced many hash collisions. A mesh with approximately a million vertices would end up with a significant number of collisions, which was a performance killer.

Using FNV hashing reduced collisions to a negligible amount, even to 0 in most cases. I don’t have the figures now, as this change happened about a year ago, but I might do some newer measurements later (read: probably never).
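For reference, this is roughly what an FNV hash over a quantized position can look like. The sketch uses the 32-bit FNV-1a variant with its standard offset basis and prime. Whether the published code uses exactly this variant or feeds the data in the same way is an assumption, and the names are hypothetical.

```csharp
using System;

// Illustrative FNV-1a (32-bit) sketch; not the article's VertexKey code.
public static class FnvExample
{
    private const uint OffsetBasis = 2166136261;
    private const uint Prime = 16777619;

    // Standard FNV-1a: for every byte, XOR it in, then multiply by the prime.
    public static uint Fnv1a(byte[] data)
    {
        unchecked
        {
            uint hash = OffsetBasis;
            foreach (byte b in data)
            {
                hash ^= b;
                hash *= Prime;
            }
            return hash;
        }
    }

    // Hashes three quantized coordinates by feeding their 24 bytes
    // (little-endian, 8 bytes per coordinate) through FNV-1a.
    public static int HashKey(long x, long y, long z)
    {
        var bytes = new byte[24];
        long[] components = { x, y, z };
        for (int c = 0; c < 3; c++)
        {
            for (int i = 0; i < 8; i++)
            {
                bytes[c * 8 + i] = unchecked((byte)(components[c] >> (8 * i)));
            }
        }
        return unchecked((int)Fnv1a(bytes));
    }
}
```

The temporary byte array keeps the example short; a real implementation would more likely fold the bytes in directly to avoid allocating on every hash.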

To summarize, here are the changes:

- Fixed issue with multiple materials not working properly.

- Changed VertexKey to use FNV hashing.

- Other (minor) performance improvements.

And I know you’re here just for the code, so here it is:

1 | /* |

After you include this code in your project, all you have to do is call RecalculateNormals(angle) on your mesh. Make sure you visit the original post for additional information about the algorithm.

A final note

This is not the optimal way of doing this. It’s meant for one-off calculations only. If you need to do this over and over again, you’d be better off using some sort of acceleration structure that lets you query nearby vertices quickly (if the faces are expected to change), or building a structure that stores the proper neighbours of each vertex.
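As one possible shape for such a per-vertex structure, the sketch below precomputes, for every vertex index, the list of triangles that use it. It is only an illustration with hypothetical names; a full solution would also need to link vertices that share a position, as discussed in the original post.

```csharp
using System;
using System.Collections.Generic;

// Illustrative only: a cached vertex-to-triangle lookup that can be reused between updates.
public static class AdjacencyExample
{
    public static List<int>[] BuildVertexToTriangles(int vertexCount, int[] triangles)
    {
        var adjacency = new List<int>[vertexCount];
        for (int v = 0; v < vertexCount; v++)
        {
            adjacency[v] = new List<int>();
        }

        // Entry i of the triangles array belongs to triangle i / 3.
        for (int i = 0; i < triangles.Length; i++)
        {
            adjacency[triangles[i]].Add(i / 3);
        }
        return adjacency;
    }
}
```

As long as the topology stays the same and only vertex positions move, this lookup can be built once and reused, so each update only has to recompute face normals and re-sum them per vertex.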